GUIDE · 7 July 2024

UIKit-based apps for Spatial Computing

Porting existing UIKit-based iOS applications to Apple's latest addition to the device family, the Apple Vision Pro.

When people talk about Apple these days, most of the conversation revolves around the Apple Vision Pro, which Apple announced at WWDC 2023. There, Apple introduced the wonderful world of Spatial Computing and all its possibilities. According to Apple, SwiftUI is the ideal way to develop apps for the Apple Vision Pro, and for a greenfield app SwiftUI is the way to go these days. But what about the thousands of UIKit apps that are already living in the App Store? Will they also be able to offer a visionOS variant of their existing codebase? If so, how difficult is it to make an existing UIKit app available on Apple's latest platform?

We will find out!

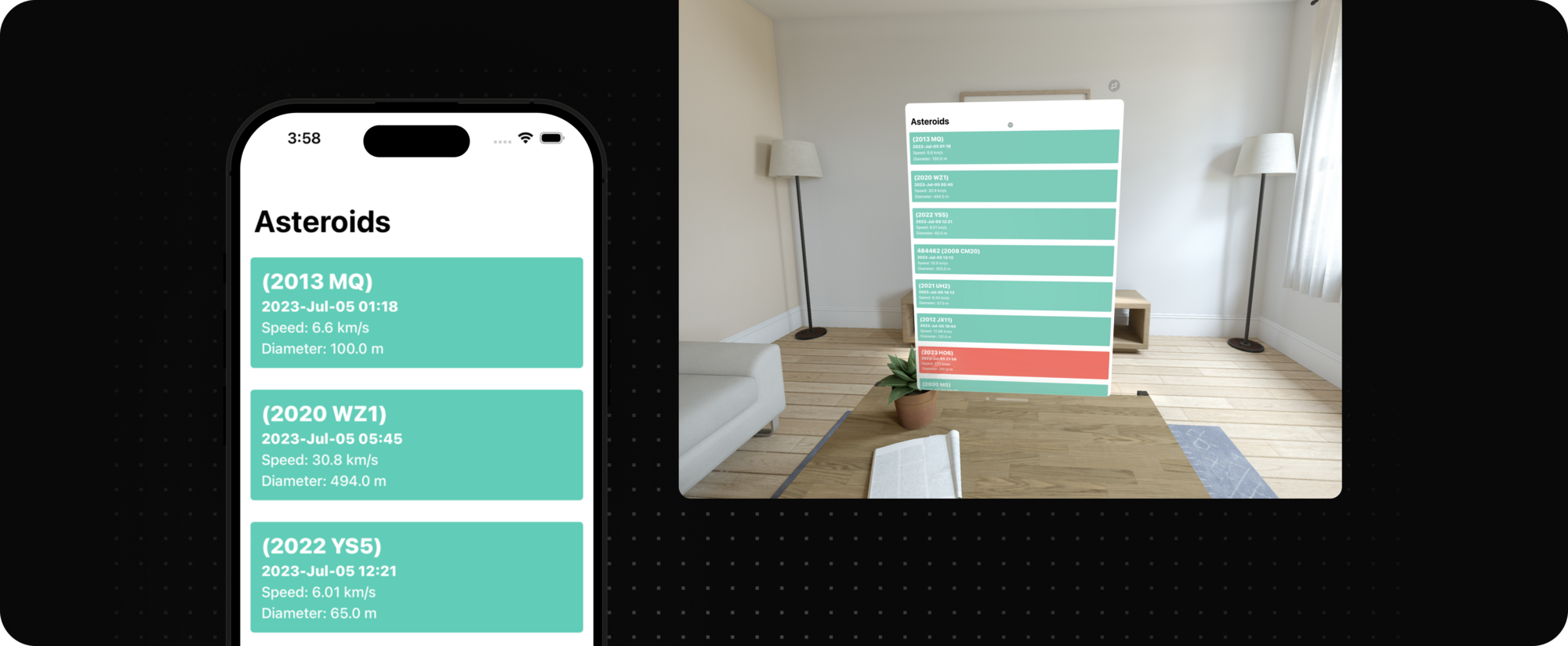

To guide us through this adventure, we use an existing UIKit-based application of ours. It lists the asteroids that will pass Earth in the near future and whether or not they are potentially threatening.

Plug n' Play

When we open an existing UIKit-based application in Xcode 15 beta 2, the first beta to include the visionOS SDK, the application runs fine and as expected on the iOS 17 simulator. The Apple Vision Pro simulator is also already listed among the available run destinations, marked as "Designed for iPad". This means that any application that can run on an iPad can also run on the visionOS simulator. The application is not yet built with the new visionOS SDK; it is still built with the iOS SDK. It appears in a window in the Shared Space, just as it would on iPad. A button lets you switch the window to landscape, but dynamic resizing is not supported.

BOOM! We have our UIKit based application running on the visionOS simulator.

UIKit native visionOS applications

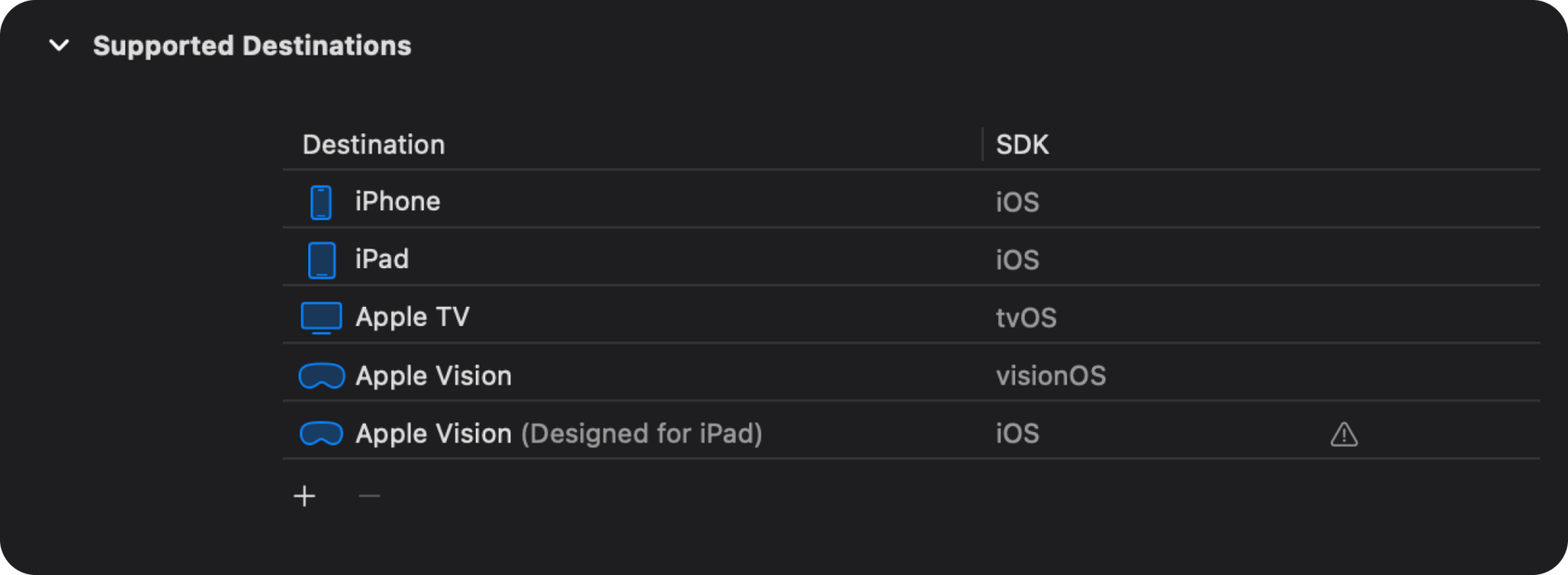

To turn an existing UIKit application into a native visionOS application, add Apple Vision as a destination under Supported Destinations for the main target.

Building the application with the visionOS SDK for the first time may result in compile errors. APIs that don't translate well to the new platform, and APIs that were deprecated before iOS 14, are not supported when building for the visionOS simulator. This might indicate that development of the platform entered its final stage around mid-2020, when iOS 14 came out.
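As an illustration of moving off a deprecated API, consider the application-wide keyWindow property, deprecated since iOS 13. The sketch below looks the key window up through the scene-based API instead, assuming the app only needs the key window of a foreground-active scene. The extension and the name foregroundKeyWindow are hypothetical and not part of UIKit; the canImport(UIKit) check only keeps the file compilable on platforms without UIKit.

```swift
#if canImport(UIKit)
import UIKit

extension UIApplication {
    /// Hypothetical replacement for the deprecated `UIApplication.shared.keyWindow`:
    /// returns the key window of a foreground-active window scene, if there is one.
    var foregroundKeyWindow: UIWindow? {
        connectedScenes
            .filter { $0.activationState == .foregroundActive }
            .compactMap { $0 as? UIWindowScene }
            .flatMap { $0.windows }
            .first { $0.isKeyWindow }
    }
}
#endif
```

Call sites then change from UIApplication.shared.keyWindow to UIApplication.shared.foregroundKeyWindow, which compiles for iOS, iPadOS and visionOS alike.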

UIDeviceOrientation and UIScreen APIs are examples of APIs that do not translate well to this new platform: Vision Pro is not used in multiple orientations, and it has no hardware screen for UIScreen to represent. Tab bars are also presented in a completely different style on visionOS. Instead of spanning from the leading to the trailing edge, they float either inside the application or on its edge, with a significant overhang. As a result, leadingAccessoryView and trailingAccessoryViews are not available when running on visionOS. When building for iOS or iPadOS, this code must of course still be executed, which can be achieved by conditionally compiling it.
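For instance, code that observes device orientation can stay in the iOS and iPadOS build and be left out of the visionOS build. The sketch below assumes a hypothetical AsteroidListViewController that uses shorter rows in landscape; the class name and row heights are illustrative. It uses the os(visionOS) spelling of the released toolchain, and the outer canImport(UIKit) check only keeps the file compilable where UIKit is missing.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical list screen of the asteroid app; the name is illustrative.
final class AsteroidListViewController: UITableViewController {

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        #if !os(visionOS)
        // Vision Pro has no device orientation, so only the iOS/iPadOS build observes it.
        UIDevice.current.beginGeneratingDeviceOrientationNotifications()
        NotificationCenter.default.addObserver(
            self,
            selector: #selector(deviceOrientationDidChange),
            name: UIDevice.orientationDidChangeNotification,
            object: nil
        )
        #endif
    }

    override func viewDidDisappear(_ animated: Bool) {
        super.viewDidDisappear(animated)
        #if !os(visionOS)
        // Balance the calls made in viewWillAppear(_:).
        NotificationCenter.default.removeObserver(
            self, name: UIDevice.orientationDidChangeNotification, object: nil)
        UIDevice.current.endGeneratingDeviceOrientationNotifications()
        #endif
    }

    #if !os(visionOS)
    @objc private func deviceOrientationDidChange() {
        // Illustrative only: use shorter rows while the device is in landscape.
        tableView.rowHeight = UIDevice.current.orientation.isLandscape ? 44 : 60
        tableView.reloadData()
    }
    #endif
}
#endif
```

Because the guarded code is never compiled for visionOS, the orientation APIs cannot trigger compile errors there, while the iOS build keeps its existing behaviour.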

1#if !os(xrOS)

2 // Operations that do not apply to visionOS

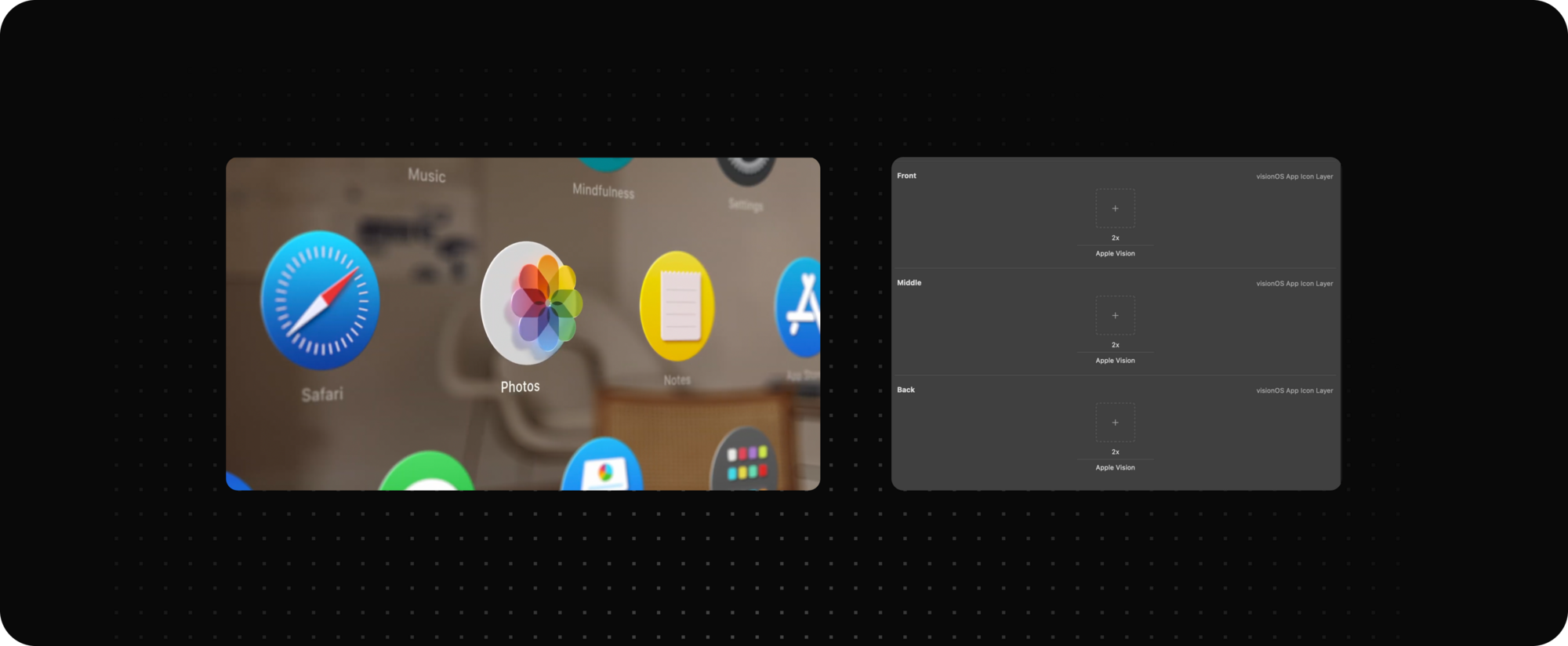

3#endifLet's start by getting the visibility of our app ready for visionOS. As we could see, Apple uses three-dimensional app icons on the home screen for its native visionOS applications that show a depth effect when hovered over by means of eye tracking. Apple has made it easy for developers to achieve this 3D effect. Uploading a visionOS app icon is done in three layers. These layers lie on top of each other as a 2D image when not in focus, but get a small gap when they do enter the focus area. Important to mention here is that the "cursor" on visionOS is your eyes. The device uses advanced eye tracking to determine the exact position where the user is looking at the Shared Space. That is why looking at an app icon will trigger this hover effect.

visionOS is generous

After conditionally compiling out the parts of the code that do not apply to this new platform and moving off deprecated APIs, it is time to run our UIKit-based application in the Shared Space.

The first thing you will notice is the application's glass background. You get this by default when using a UINavigationController or a UISplitViewController. To change it, override the new preferredContainerBackgroundStyle property on a UIViewController and return .automatic, .glass, or .hidden.

```swift
import UIKit

class TestViewController: UIViewController {
    // Choose the container background on visionOS: .automatic, .glass or .hidden.
    override var preferredContainerBackgroundStyle: UIContainerBackgroundStyle {
        return .glass
    }
}
```
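If the same target still builds for iOS and iPadOS, the override presumably has to be wrapped in a compile-time check, assuming (as in the SDK we used) that preferredContainerBackgroundStyle only exists when building for visionOS. The sketch below assumes a hypothetical image-heavy detail screen that should fill its window without the default glass; the class name is illustrative.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical full-window image screen of the asteroid app.
final class AsteroidImageViewController: UIViewController {
    #if os(visionOS)
    // No glass behind this screen, so the imagery reaches the window edges.
    override var preferredContainerBackgroundStyle: UIContainerBackgroundStyle {
        .hidden
    }
    #endif
}
#endif
```

On iOS and iPadOS the class compiles without the override and keeps its regular background.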

When you hover over the UITableViewCells of the application, each cell gets highlighted. We never defined this behaviour ourselves, and it does not occur in our iOS build; again, it is something we get for free when building a native visionOS application and running it in the Shared Space. The hover behaviour is configurable per view through a new UIKit property called hoverStyle, which can be set to .highlight, .lift, or nil when no hover animation is appropriate in a given context. You can take this even further with the new UIShape API, which lets you shape the hover effect as desired.
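A minimal sketch of per-view hover configuration follows. It assumes two hypothetical views from the asteroid app's detail screen and is written against the released SDK's UIHoverStyle(shape:) initialiser and UIShape.capsule; the beta the article used may have spelled this API differently, so check it against your SDK.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical helper for two views on the asteroid app's detail screen.
@MainActor
func configureHover(detailsButton: UIButton, hazardBadge: UIView) {
    #if os(visionOS)
    // Interactive control: shape the hover effect as a capsule around the button.
    detailsButton.hoverStyle = UIHoverStyle(shape: .capsule)
    // Decorative badge: not interactive, so no hover effect at all.
    hazardBadge.hoverStyle = nil
    #endif
}
#endif
```

Setting hoverStyle to nil is the opt-out the paragraph above mentions; the shape only changes the outline of the effect, not whether it appears.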

By adding default behaviours such as hover effects and materials to native visionOS applications, Apple encourages developers to use system components to give users the most complete experience. Other factors, such as the distance of the windows and the background lighting that bleeds through the glass background in the Shared Space, make it more important than ever to use semantic colours and fonts, which adapt to each Apple platform. Instead of hardcoding colour values, the smarter approach is to use the system-provided colours, which have slightly different shades on each platform in dark and light appearances. For example, systemBackgroundColor is more vibrant by default when it is placed on top of the glass background. Likewise, semantic system fonts adapt their size to the user's Dynamic Type setting, keeping the text readable for everyone using your application.
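The sketch below shows what this looks like for a table view cell: only system text styles and semantic colours, no hardcoded sizes or RGB values. AsteroidCell and its configure method are hypothetical names for illustration, and the layout is deliberately minimal.

```swift
#if canImport(UIKit)
import UIKit

/// Hypothetical asteroid cell styled only with semantic colours and text styles.
final class AsteroidCell: UITableViewCell {
    private let nameLabel = UILabel()
    private let distanceLabel = UILabel()

    override init(style: UITableViewCell.CellStyle, reuseIdentifier: String?) {
        super.init(style: style, reuseIdentifier: reuseIdentifier)

        // Text styles follow the user's Dynamic Type setting.
        nameLabel.font = UIFont.preferredFont(forTextStyle: .headline)
        distanceLabel.font = UIFont.preferredFont(forTextStyle: .subheadline)
        [nameLabel, distanceLabel].forEach { $0.adjustsFontForContentSizeCategory = true }

        // Semantic colours resolve per platform and appearance instead of fixed values.
        nameLabel.textColor = UIColor.label
        distanceLabel.textColor = UIColor.secondaryLabel

        let stack = UIStackView(arrangedSubviews: [nameLabel, distanceLabel])
        stack.axis = .vertical
        stack.translatesAutoresizingMaskIntoConstraints = false
        contentView.addSubview(stack)
        let margins = contentView.layoutMarginsGuide
        NSLayoutConstraint.activate([
            stack.leadingAnchor.constraint(equalTo: margins.leadingAnchor),
            stack.trailingAnchor.constraint(equalTo: margins.trailingAnchor),
            stack.topAnchor.constraint(equalTo: margins.topAnchor),
            stack.bottomAnchor.constraint(equalTo: margins.bottomAnchor)
        ])
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    /// Fills the cell; potentially threatening asteroids get the system red.
    func configure(name: String, distance: String, isHazardous: Bool) {
        nameLabel.text = name
        distanceLabel.text = distance
        nameLabel.textColor = isHazardous ? UIColor.systemRed : UIColor.label
    }
}
#endif
```

The same cell then picks up the appropriate shades on iOS, iPadOS and on top of the visionOS glass without any platform checks.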

Conclusion

While the visionOS simulator still feels a bit buggy and slow (it even crashed on the first launch 😬), Apple has done a good job of making existing UIKit applications available on visionOS.

As a developer, it felt like no more work than the annual Xcode upgrade and the mandatory API updates. You can of course go as far as you want in polishing the app for this new platform, but running an existing app in the visionOS simulator worked seamlessly. This research does not consider third-party libraries: they also need to adopt the new APIs and adapt their codebases so that they can be built for visionOS, which is not in our hands.